The AI Governance Landscape

The passing of the European Union’s Artificial Intelligence Act (AI Act) in 2024 marked a key milestone in the governance of AI. The Act is one of the first comprehensive legal frameworks on AI anywhere in the world, and some believe it will shape the future of AI regulation globally. It establishes obligations for market actors based on the potential risks and level of impact posed by different AI systems. For “high-risk” systems, a range of mandatory requirements (including a conformity assessment) must be met before a system can be placed on the market. The Act also places specific obligations on providers of general-purpose AI models (also known as foundation models).

In the United States, policymaking on AI has moved from a relatively hands-off, agency-by-agency approach towards a more coherent regime. In October 2023, President Biden issued the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. The Order sets out requirements for AI transparency, testing, and cybersecurity, and directs a major programme of work across the federal government. Alongside White House initiatives, regulators such as the Federal Trade Commission (FTC) have shown increasing willingness to investigate and intervene in AI markets. Nevertheless, Congress has yet to pass any AI-specific legislation, and we are likely to see more state-level policymaking on AI in the years to come.

Elsewhere around the world, other governments have proposed comprehensive legislation on AI. Canada's draft AI and Data Act follows a similar risk-based approach to that of the European Union, and Brazil has published an AI Bill that would establish a regulatory regime, including civil liability for AI providers. These efforts stand in contrast to more voluntarist approaches taken by countries like Singapore, which has developed various initiatives for responsible AI deployment.

Beyond national-level regimes, there is a growing focus on international cooperation on AI governance. In October 2023, under what is known as the Hiroshima Process, G7 leaders agreed Guiding Principles and a Code of Conduct for organisations developing the most advanced AI systems, including foundation models and generative AI systems. In November 2023, the UK government hosted the AI Safety Summit at Bletchley Park, bringing together governments and leading AI companies to set the agenda for future international cooperation on AI safety. The resulting Bletchley Declaration was signed by all countries attending the summit, including China and the United States.

Over the next few years, decision-makers will determine the institutional arrangements that govern AI globally. Some experts suggest that, rather than a single institution, we are likely to see the emergence of a regime complex comprising multiple institutions within and across several functional areas.

Global Policy Venues

Bletchley Declaration

In November 2023, the UK government brought together actors from across governments, leading AI companies, civil society, and academia at Bletchley Park to set the agenda for future international cooperation on AI safety. The Bletchley Declaration was signed by all countries attending the summit, including China and the United States. A second AI Safety Summit, co-hosted by the UK and the Republic of Korea, was held partly online in May 2024, and will be followed by an AI Action Summit to be held in France in February 2025.

United Nations

In October 2023, the United Nations Secretary-General António Guterres announced the creation of a High-level Advisory Body on AI to analyse and advance recommendations for the international governance of AI. The Advisory Body will publish its final report ahead of the Summit of the Future to be held in 2024.

Within the UN system, the ITU and UNESCO have recently led an inter-agency working group on AI. In 2024, the working group published a White Paper analysing the UN system's institutional models, functions, and existing international normative frameworks applicable to global AI governance.

OECD

The Organisation for Economic Co-operation and Development (OECD) continues to be a key forum for the development of AI governance and assurance. In recent months, there has been considerable convergence around OECD definitions and benchmarks, and around nominally risk-based regimes. This includes the OECD's updated definition of an AI system (2023):

“An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.”

G7 and the Hiroshima Process

In April 2023, Japan hosted a meeting of G7 Digital and Technology Ministers, at which ministers agreed to prioritise collaboration for inclusive governance of the most advanced AI technologies. In October 2023, G7 leaders agreed on the Hiroshima Process International Guiding Principles for Organizations Developing Advanced AI Systems and the Hiroshima Process International Code of Conduct for Organizations Developing Advanced AI Systems. Both the Principles and the Code of Conduct will provide guidance for organisations developing and deploying the most advanced AI systems, including foundation models and generative AI systems.

Key global policy documents

UN General Assembly resolution: Seizing the opportunities of safe, secure and trustworthy AI for sustainable development (2024)

AI Advisory Body interim report: Governing AI for Humanity (2023)

Hiroshima Process International Guiding Principles for Organizations Developing Advanced AI Systems (2023)

UNESCO Recommendation on the Ethics of Artificial Intelligence (2021)

OECD AI Principles (2019; updated in 2024)

European Union

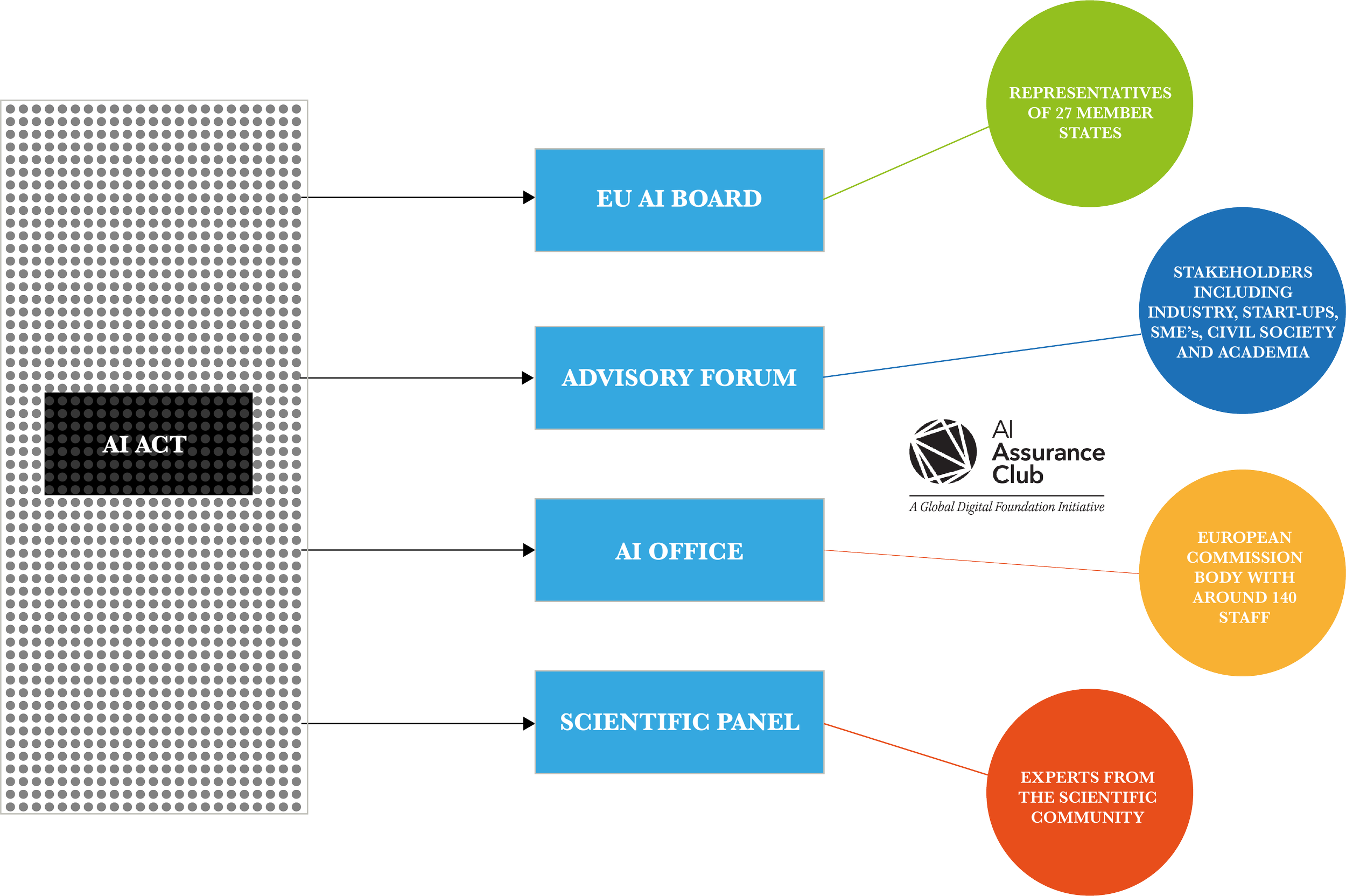

Now that the AI Act has become law, its provisions will apply in stages over a 36-month transition period. Key bodies are being established to oversee and drive implementation of the Act.

The European AI Office

Launched in June 2024, the European AI Office will serve as the foundation of the AI Act’s governance structure. The AI Office is part of the European Commission and has around 100 staff. It will support the relevant authorities in Member States in overseeing the implementation of the AI Act, and will directly enforce the rules for general-purpose AI models. Its powers include the ability to conduct evaluations of general-purpose AI models, request information from model providers, and apply sanctions.

The AI Board

The AI Act establishes the European Artificial Intelligence Board (the AI Board), whose membership comprises one representative from each Member State. The AI Board serves as a coordination mechanism between national “competent authorities” (or regulators). Its primary purpose is to facilitate consistent and effective application of the AI Act across Member States. To do this, it will collect and share technical and regulatory expertise and best practice.

The Scientific Panel

The AI Act requires the European Commission to establish a scientific panel of independent experts who will support enforcement of the regulation. The panel will advise the AI Office on activities including its assessments of the risks posed by general-purpose AI models.

The Advisory Forum

The AI Board and the AI Office will also be advised by a forum of stakeholders, including representatives from industry, SMEs, civil society and NGOs, and academia. Permanent members of the forum include: ENISA (the EU cybersecurity agency), the EU’s Fundamental Rights Agency, and the three European Standardisation Organisations (ESOs): CEN, CENELEC, and ETSI.

Key European Union documents

Final text of the AI Act (2024)

C(2023)3215 – Standardisation request M/593 (2023)

Communication on boosting startups and innovation in trustworthy artificial intelligence (2024)

While the AI Act is the most significant piece of EU legislation on AI, it is not the only instrument governing the development, adoption, and use of AI. Various other horizontal and sectoral instruments place obligations on providers of AI systems.

AI Liability Directive (draft)

Cyber Resilience Act (draft)

New Product Liability Directive (close to adoption)

Digital Services Act (in force)

Digital Markets Act (in force)

General Data Protection Regulation (in force)

The Role of Standards

Unlike legislation, standards are voluntary and developed by consensus in independent organisations. As well as product standards, international standards can take the form of test methods, codes of practice, guideline standards and management systems standards.

While the AI Act sets out what organisations must do to meet legal requirements, harmonised standards will provide technical specifications detailing exactly what is expected of regulated entities. In May 2023, the European Commission adopted its standardisation request on AI, which was accepted by CEN and CENELEC. Work is currently underway in CEN-CENELEC Joint Technical Committee 21 (pictured) to develop these standards by the deadline of April 2025. Much of this work is informed by relevant existing ISO/IEC standards on AI. Adherence to these harmonised standards will provide a presumption of conformity for providers of high-risk AI systems.

General-purpose AI models are not yet covered by any international standards or included in any European Commission standardisation requests. To enable compliance with the Act in the meantime, the AI Office will facilitate the development of non-binding Codes of Practice for general-purpose AI.

Photograph: members of the CEN-CENELEC JTC 21 Committee meet for a plenary session at the University of Bath, June 2024